September 4th, 2018

OpenStreetMap

#2,300

Big Apple, bad apples

Give them an inch and they’ll take a mile. For all the good that can come of the “Edit” button on open content sites like Wikipedia and OpenStreetMap – disseminating knowledge, giving a voice to marginalized communities, facilitating humanitarian initiatives – anyone deeply involved with such projects can tell you it all just barely works. It’s a daily miracle that the projects haven’t collapsed under the weight of graffiti, spam, and outright lies. To hear it from countless educators, Wikipedia simply can’t be trusted.

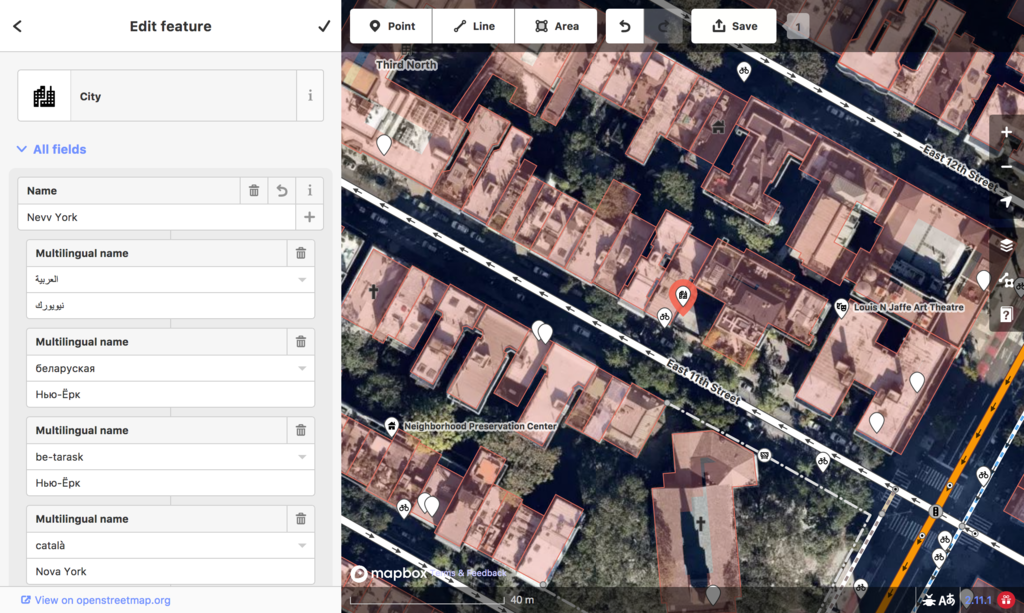

On Thursday, Mapbox and its customers fell victim to very prominent vandalism in OpenStreetMap, in which the label for New York City got renamed to something juvenile and offensive. A few weeks earlier, Wikimedia Maps was also affected by the same act of vandalism, which included numerous slurs, frustrating Wikipedia administrators who felt that OpenStreetMap doesn’t have its act together. Both episodes were painful for me to witness, as a longtime proponent of collaboration between the Wikipedia and OpenStreetMap communities and, obviously, as a Mapbox employee. In the days since, there’s been quite a bit of discussion in the OpenStreetMap U.S. Slack workspace about how OpenStreetMap and the consumers of its data could better prevent or mitigate such attacks. Even someone seeking credit for the attack has joined in with suggestions for improving “security”. True to form, there have been renewed calls for Wikipedia-style article protection or Google Maps–style moderation. But either approach is a poor fit for an open project built on geographic data.

A Wikipedia article can be protected, disabling the “Edit” button for everyone except administrators, quite effectively preventing it from being vandalized. But what would the equivalent be on OpenStreetMap? Protecting a single feature, such as the New York City node, would only invite a vandal to place an asinine node right next to it. (On Wikipedia, you can create a “Nevv York City” article full of junk, but nothing would ever link to it, so the impact would be minimal.) Protecting the area around the New York City node, meanwhile, would deprive that city’s residents of the ability to contribute local knowledge to the project. OpenStreetMap is still incomplete enough that we can’t afford to lock down any portion of the map, least of all a fast-changing city full of potential contributors.

The Wikipedia community has always viewed article protection as a public admission of failure, only to be used as a last resort. Given that the project’s slogan is “an encyclopedia that anyone can edit”, why should so many important articles be permanently closed to editing? Wikipedia has tried to replace after-the-fact countervandalism with several different moderation systems. The most recent was finally adopted by the German Wikipedia, but the English Wikipedia applies it only on a limited basis to a handful of articles. Meanwhile, countless pages remain permanently protected. To say that moderation hasn’t taken off is quite an understatement.

What’s more, instituting a peer review process for OpenStreetMap would entail more than just flipping a switch. While verifying a Wikipedia edit might mean spot-checking cited sources, OpenStreetMap prizes unpublished local knowledge, so truly reviewing changes and staving off hoaxes could in many cases require in-person visits. Google Maps and Foursquare, two commercial, crowdsourced mapping projects, actively recruit locals to spend all their time curating and groundtruthing, yet they still suffer from rampant vandalism. OpenStreetMap already encourages groundtruthing, but any hard requirement along those lines would either be roundly ignored or lead to the immediate death of the project.

To be sure, there’s more to countervandalism than locking things down. The vandalism that propagated to Wikimedia Maps, then Mapbox, lasted less than two hours on OpenStreetMap before the community reverted the changes and banned the vandal’s user account. Mapbox has deployed increasingly sophisticated tools, including machine learning, for automatically detecting and blocking vandalism that does make it past the OpenStreetMap community. Wikipedia has done much the same for its content to good effect, which is why its administrators found it so frustrating that vandalism would still work its way in via embedded maps.

Last week’s incident was an exception, proving the adage that an adversary only has to get lucky once. That style of vandalism would probably have been caught by Wikipedia’s extensive system of blacklists and abuse filters, which prevent vandals from even saving blatantly bad edits. But a persistent vandal – this one used JOSM, the OpenStreetMap editor so advanced I steer clear of it – will eventually find a way around any blacklist or filter. And if the goal is to reduce the amount of time that a vandal’s work remains on the site, then any solution will also have the undesired effect of helping the vandal learn new tricks faster, like a virus that evolves more rapidly in a Petri dish.
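To make the cat-and-mouse problem concrete, here is a minimal sketch of a content-based filter. It is not how Wikipedia’s abuse filters or any OpenStreetMap tool actually work; the `BLACKLIST` contents and the `passes_filter` function are hypothetical, and “badword” is a stand-in for whatever term a real list would contain.

```python
# Hypothetical blacklist; "badword" is a placeholder, not a real entry.
BLACKLIST = ["badword"]


def passes_filter(new_name: str) -> bool:
    """Return True if a proposed name contains no blacklisted term."""
    normalized = new_name.casefold()  # case-insensitive comparison
    return not any(term in normalized for term in BLACKLIST)


print(passes_filter("New York"))      # an ordinary name is accepted
print(passes_filter("Badword City"))  # the blatant edit is rejected
print(passes_filter("B4dword City"))  # one swapped character slips through
```

The last call is the whole point: a filter that matches on content only catches the spellings someone has already thought to list.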

For my part, I think the OpenStreetMap ecosystem places far too much emphasis on bad actions while doing very little to identify and block bad actors. As a Wikipedia administrator for the last 15 years, I’ve seen firsthand the lengths that people will go to evade content-based countervandalism. If you do battle long enough with a persistent vandal, it’s only a matter of time before well-meaning contributors give up due to the inconvenience of avoiding benign words and any article of interest. You can maintain the project’s health much more effectively by targeting the malicious individual.

At any given time, a bewildering number of IP addresses and IP address ranges are blocked from editing Wikipedia, either temporarily or permanently. Open proxies are blocked on sight. There are even tools for ferreting out sockpuppets and sleeper accounts and blocking them proactively, along with rigorous accountability to prevent administrators from abusing ordinary users’ privacy. OpenStreetMap needs to adopt similar antiabuse tools based on user identities, if it is to have any hope against the hordes of script kiddies that now realize the map is subject to vandalism.
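For a rough idea of what an identity-based check looks like, as opposed to a content-based one, here is a small sketch using Python’s standard `ipaddress` module. The `BLOCKED_RANGES` list and the `is_blocked` function are my own illustration, not the code of any real wiki or OpenStreetMap tool, and the addresses come from ranges reserved for documentation.

```python
import ipaddress

# Hypothetical block list: one IPv4 range, one single address, one IPv6 range.
# All of these are reserved documentation addresses, not real blocks.
BLOCKED_RANGES = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.17/32"),
    ipaddress.ip_network("2001:db8::/32"),
]


def is_blocked(address: str) -> bool:
    """Return True if the address falls inside any blocked range."""
    ip = ipaddress.ip_address(address)
    # Membership is False when the IP versions differ, so mixing
    # IPv4 and IPv6 entries in one list is safe.
    return any(ip in network for network in BLOCKED_RANGES)


print(is_blocked("192.0.2.55"))   # inside the blocked /24
print(is_blocked("203.0.113.9"))  # not in any listed range
```

Note that the check never looks at what the edit says, only at where it comes from, so there is no wording for a vandal to tweak.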

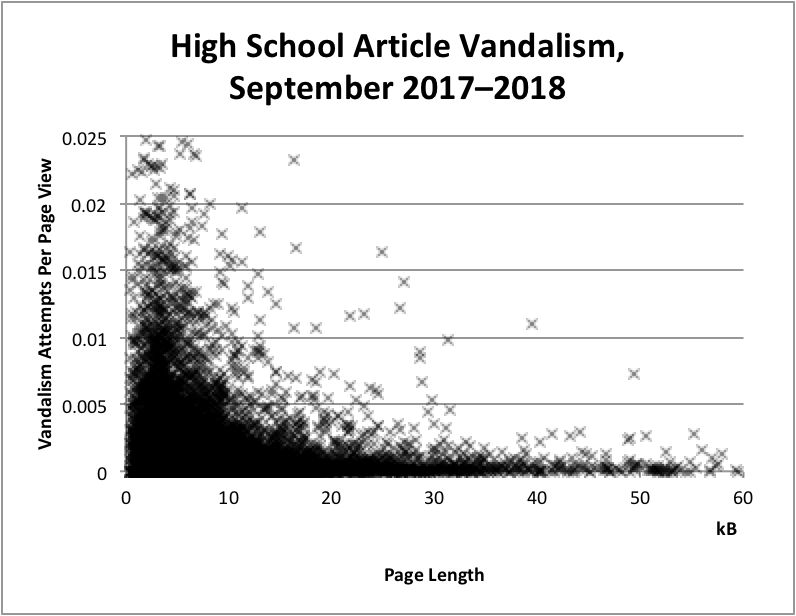

But more than any technical solution, the best approach I’ve found to fighting vandalism requires no software changes at all. OpenStreetMap needs to double down on building the most jaw-droppingly detailed map imaginable. At Wikipedia, articles on uncontroversial subjects suffer from less frequent vandalism as they develop from stubs into detailed articles. You’d think a more complete encyclopedia article would give the vandals more to tear apart, but in fact the opposite is true. I see this trend most clearly with articles about high schools, a favorite target of vain and profane adolescents:

A school article tends to be vandalized by two groups of people: the school’s own students and those of the school’s sports rivals. I suspect the former group is far less likely to vandalize an article that represents their school surprisingly well. I’m unsure why the rival schools’ students also tend to leave the article alone, but I wonder if it’s because the article’s length and detail make it look like less of a toy to be kicked around. Maybe an article or a map needs a critical mass of credibility to stave off these acts of immaturity. If so, that’s good news for OpenStreetMap, because so many of the community’s efforts – importing building footprints, adding turn lanes and speed limits, refining the cartography of popular map renderers – are primarily about parity with user expectations and thus about building trust with the user.

OpenStreetMap would do well to ignore calls to indiscriminately lock down content or lock out good-faith contributors. Technical barriers to entry are never a sound way to grow a community-oriented project. Instead, with a modicum of well-considered, identity-based antiabuse measures, the project’s contributors can go about their business, drowning out the vandals that already make too much of their dumb luck.
